Kafka Delivery Model

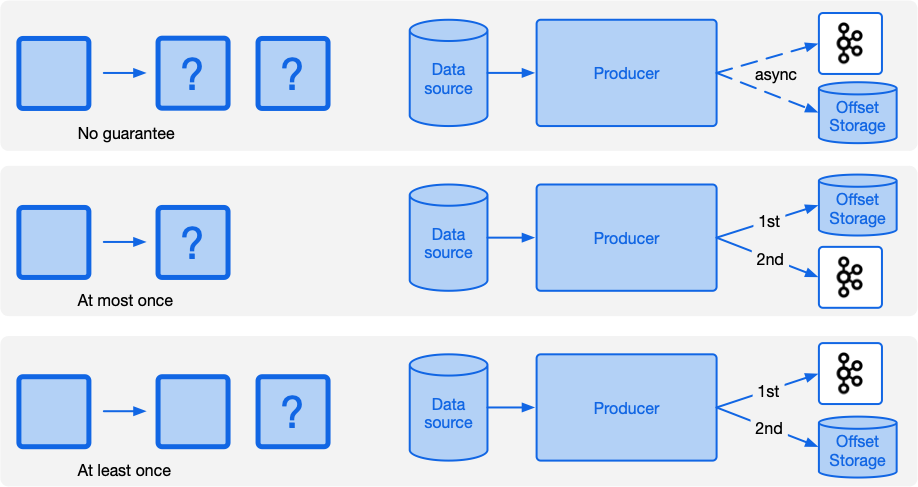

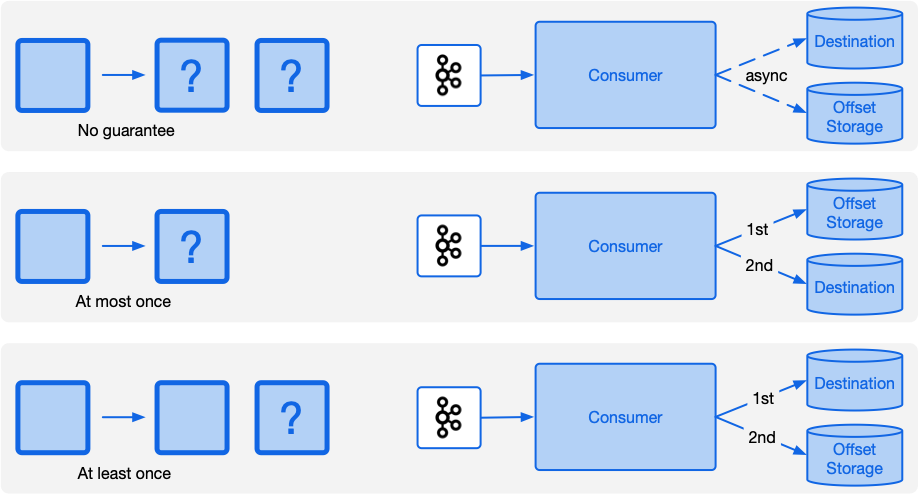

There are four delivery models:

- No guarantee

- At most once

- At least once

- Exactly once

No guarantee

This is fire and forget. The producer just fires the event: if the consumer gets it, good; if not, nobody cares.

The consumer sets enable.auto.commit = true, which commits offsets automatically every auto.commit.interval.ms. The offset commit happens asynchronously, independently of our own data write.
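As a minimal sketch, the auto-commit setup above corresponds to consumer properties like the following. The two property keys are real Kafka consumer settings; the class name, the helper name, and the 5000 ms interval (Kafka's default) are only illustrative, and connection and deserializer settings are left out.

```java
import java.util.Properties;

class AutoCommitConfig {
    // Builds consumer properties for the "no guarantee" mode: offsets are
    // committed in the background, independently of our own processing.
    static Properties autoCommitProps() {
        Properties props = new Properties();
        props.setProperty("enable.auto.commit", "true");
        // How often offsets are auto-committed (5000 ms is Kafka's default).
        props.setProperty("auto.commit.interval.ms", "5000");
        return props;
    }

    public static void main(String[] args) {
        System.out.println(autoCommitProps());
    }
}
```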

Either saving to the database or committing the offset can fail, and we don't know which one did. We end up with one of two outcomes:

- Duplicate records:

  - The database save succeeds but the offset commit fails.

  - The same message is therefore reprocessed and saved to the database again.

- Unprocessed records:

  - The offset commit succeeds but the database save fails.

  - The consumer therefore believes the record was saved correctly and never retries it.

At most once

Here we set enable.auto.commit = false because we commit the offsets ourselves.

We commit in this sequence:

1. Commit the offset to Kafka, marking the message as processed

2. Save the data to the database

This guarantees at-most-once delivery:

- If step 1 fails, we retry.

- If step 2 fails, we ignore it (the message is lost, because its offset is already committed).

- If neither step fails, the message is delivered once.
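The commit-then-save order can be illustrated with a small simulation. This is only a sketch with no Kafka client involved: the list stands in for the database, the integer for the committed offset, and `failSaveAt` is a hypothetical knob that makes one save fail.

```java
import java.util.ArrayList;
import java.util.List;

class AtMostOnceDemo {
    // Simulates a consumer that commits the offset first, then saves.
    // failSaveAt is the offset whose database save fails (-1 for no failure).
    static List<Integer> run(int messageCount, int failSaveAt) {
        List<Integer> database = new ArrayList<>();
        int committedOffset = 0; // next offset to read
        while (committedOffset < messageCount) {
            int offset = committedOffset;
            committedOffset = offset + 1; // step 1: commit the offset
            if (offset == failSaveAt) {
                continue;                 // step 2 failed: the message is lost
            }
            database.add(offset);         // step 2: save to the database
        }
        return database;
    }

    public static void main(String[] args) {
        System.out.println(run(5, 2)); // prints [0, 1, 3, 4]
    }
}
```

Message 2 never reaches the database, and no message appears twice.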

Use case

- No duplicates.

- Messages can be lost.

- Very fast; suitable for work where finishing quickly matters more than the result of each individual message.

At least once

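Here again we set enable.auto.commit = false and commit the offsets ourselves.

We commit in this sequence:

1. Save the data to the database

2. Commit the offset to Kafka

If step 1 fails, the offset is not committed and the message is read again. If step 2 fails after a successful save, the message is also read again and saved a second time.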
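The save-then-commit order can be sketched the same way. Again this is a simulation under assumptions, not Kafka client code: the list stands in for the database, and `failCommitAt` is a hypothetical knob that makes one offset commit fail once.

```java
import java.util.ArrayList;
import java.util.List;

class AtLeastOnceDemo {
    // Simulates a consumer that saves first, then commits the offset.
    // failCommitAt is the offset whose commit fails once (-1 for no failure).
    static List<Integer> run(int messageCount, int failCommitAt) {
        List<Integer> database = new ArrayList<>();
        int committedOffset = 0; // next offset to read
        boolean alreadyFailed = false;
        while (committedOffset < messageCount) {
            int offset = committedOffset;
            database.add(offset);             // step 1: save to the database
            if (offset == failCommitAt && !alreadyFailed) {
                alreadyFailed = true;         // step 2 failed: offset not advanced
                continue;                     // the same message is read again
            }
            committedOffset = offset + 1;     // step 2: commit the offset
        }
        return database;
    }

    public static void main(String[] args) {
        System.out.println(run(5, 2)); // prints [0, 1, 2, 2, 3, 4]
    }
}
```

Message 2 is saved twice, but nothing is lost.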

Use case

- Duplicates are possible.

- No message is lost.

- Suitable for medium workload.

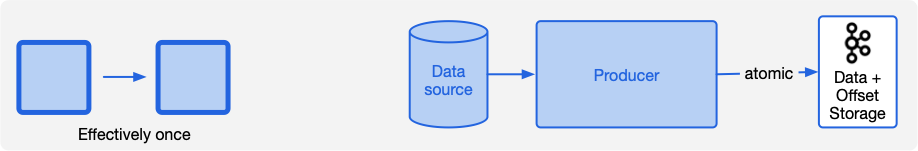

Exactly once (effectively once)

We again set enable.auto.commit = false because we commit the offsets ourselves.

We set enable.idempotence = true on the producer so that it sends its PID (producer ID) and a sequence number with each batch; the broker uses them to filter out duplicates.
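To make the deduplication idea concrete, here is a deliberately simplified sketch of how a log could drop retried records using the producer ID and sequence number. It is not the broker's actual implementation: it models a single partition, ignores sequence gaps, and all class and method names are made up for illustration.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class IdempotentLogDemo {
    // Highest sequence number accepted so far, per producer ID.
    private final Map<Long, Integer> lastSequence = new HashMap<>();
    private final List<String> records = new ArrayList<>();

    // Returns true if the record was appended, false if it was a duplicate.
    boolean append(long producerId, int sequence, String value) {
        int last = lastSequence.getOrDefault(producerId, -1);
        if (sequence <= last) {
            return false; // retry of a record that was already written
        }
        lastSequence.put(producerId, sequence);
        records.add(value);
        return true;
    }

    List<String> records() {
        return records;
    }
}
```

A producer that resends sequence 1 after a lost acknowledgement gets `false` back from the second `append`, and the log still holds the record only once.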

The producer also needs a transactional ID, for example transactional.id=my-tx-id. Transactions depend on idempotence, so enable.idempotence must stay true.

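```java
producer.initTransactions();
producer.beginTransaction();
// Write the output records produced from the consumed batch.
outputRecords.forEach(producer::send);
// Commit the consumed offsets in the same transaction. Assumes sourceOffsets is a
// Map<TopicPartition, OffsetAndMetadata> and consumer is the KafkaConsumer that read the batch.
producer.sendOffsetsToTransaction(sourceOffsets, consumer.groupMetadata());
producer.commitTransaction();
```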

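The consumer can then set isolation.level, similar to the isolation in ACID. Note that by default the Kafka consumer uses read_uncommitted, so it must be set to read_committed to see only messages from committed transactions.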
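The settings mentioned in this section can be collected as in the sketch below. The property keys and values come from the text above; the class and method names are illustrative, and connection and serializer settings are omitted.

```java
import java.util.Properties;

class ExactlyOnceConfig {
    // Producer side: idempotence plus a transactional ID.
    static Properties producerProps() {
        Properties props = new Properties();
        props.setProperty("enable.idempotence", "true");
        props.setProperty("transactional.id", "my-tx-id");
        return props;
    }

    // Consumer side: manual commits, and only committed transactional data is read.
    static Properties consumerProps() {
        Properties props = new Properties();
        props.setProperty("enable.auto.commit", "false");
        props.setProperty("isolation.level", "read_committed");
        return props;
    }

    public static void main(String[] args) {
        System.out.println(producerProps());
        System.out.println(consumerProps());
    }
}
```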

Use case

- Critical workloads that need an exactly-once delivery guarantee.

- Much slower and more complicated to set up.

[!note]

This transaction mode only applies at the Kafka level. For writes to a database, consider using an atomic operation or a database transaction.
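One possible way to make the database side safe against redelivery is an idempotent write keyed by the message's position in Kafka. The sketch below is an assumption, not something prescribed by Kafka: the map stands in for a table with a unique key on topic, partition, and offset, and all names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

class IdempotentStoreDemo {
    // Stand-in for a table whose primary key is the message's position in Kafka.
    private final Map<String, String> rows = new HashMap<>();

    // Returns true if a new row was written, false if the message was already stored.
    boolean saveOnce(String topic, int partition, long offset, String value) {
        String key = topic + "-" + partition + "-" + offset;
        // putIfAbsent checks and writes in one call, so a redelivered message changes nothing.
        return rows.putIfAbsent(key, value) == null;
    }

    int rowCount() {
        return rows.size();
    }
}
```

With a write like this, an at-least-once consumer can reprocess a message without creating a duplicate row.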